Geraud Nangue Tasse

Welcome!

I am a Ph.D. student in Computer Science at the University of the Witwatersrand (Wits), advised by Professor Benjamin Rosman. I am also a member of the RAIL Lab research group. Before moving to Wits for my M.Sc, I completed my B.Sc in Computer Science, Pure & Applied Mathematics, and Physics at Rhodes University.

News

- December 2022: Talk at the Deep Learning IndabaX South Africa in Pretoria

- October 2022: Talk at UCT's SHOCKLAB

- August 2022: Lecturing a Computational Intelligence MSc course

- July 2022: Talk at Brown's robotics lab

- June 2022: New RLDM paper on World Value Functions

- May 2022: Summer PhD research internship at IBM Thomas J. Watson Research Center in New York

- January 2022: New ICLR paper on Generalisation in Lifelong RL

- September 2021: Workshop paper at AAAI Fall Symposium on AI for HRI

- August 2021: Teaching assistant at Neuromatch

- April 2021: Won the IBM PhD Fellowship

Interests

I have always been fascinated by the immense potential for good of creating intelligent systems, the pinnacle of which is artificial general intelligence (AGI). My main research interests lie in reinforcement learning (RL), since it is the subfield of machine learning with the most potential for achieving AGI (through embodied agents that leverage the other AI fields where appropriate).

I am currently interested in techniques that allow an agent to leverage past knowledge to solve new tasks quickly. In particular, I focus on composition techniques, which allow an agent to leverage existing skills to build complex, novel behaviors. During my M.Sc research, I introduced a formal framework for specifying tasks using logic operators (AND, OR, NOT), and showed how to apply the same operators to learned skills to generate provably optimal solutions to new tasks. Not only does this allow for safer and more interpretable reward specification, since any new task reward function can be composed of well-understood components, it also leads to a combinatorial explosion in an agent’s ability. These properties are particularly important for agents in a multitask or lifelong setting.

Here are a couple of long-term milestones I think are needed on the road to AGI, and which I am working towards:

- A knowledge representation for transfer learning in RL that is provably general, yet sufficiently constrained to be practical.

- Principled methods for efficiently learning base skills with said knowledge representation.

- Principled methods for agents to do any computation over skills with little or no further learning. That is, creating agents that are Turing complete in skill space.

Featured Research


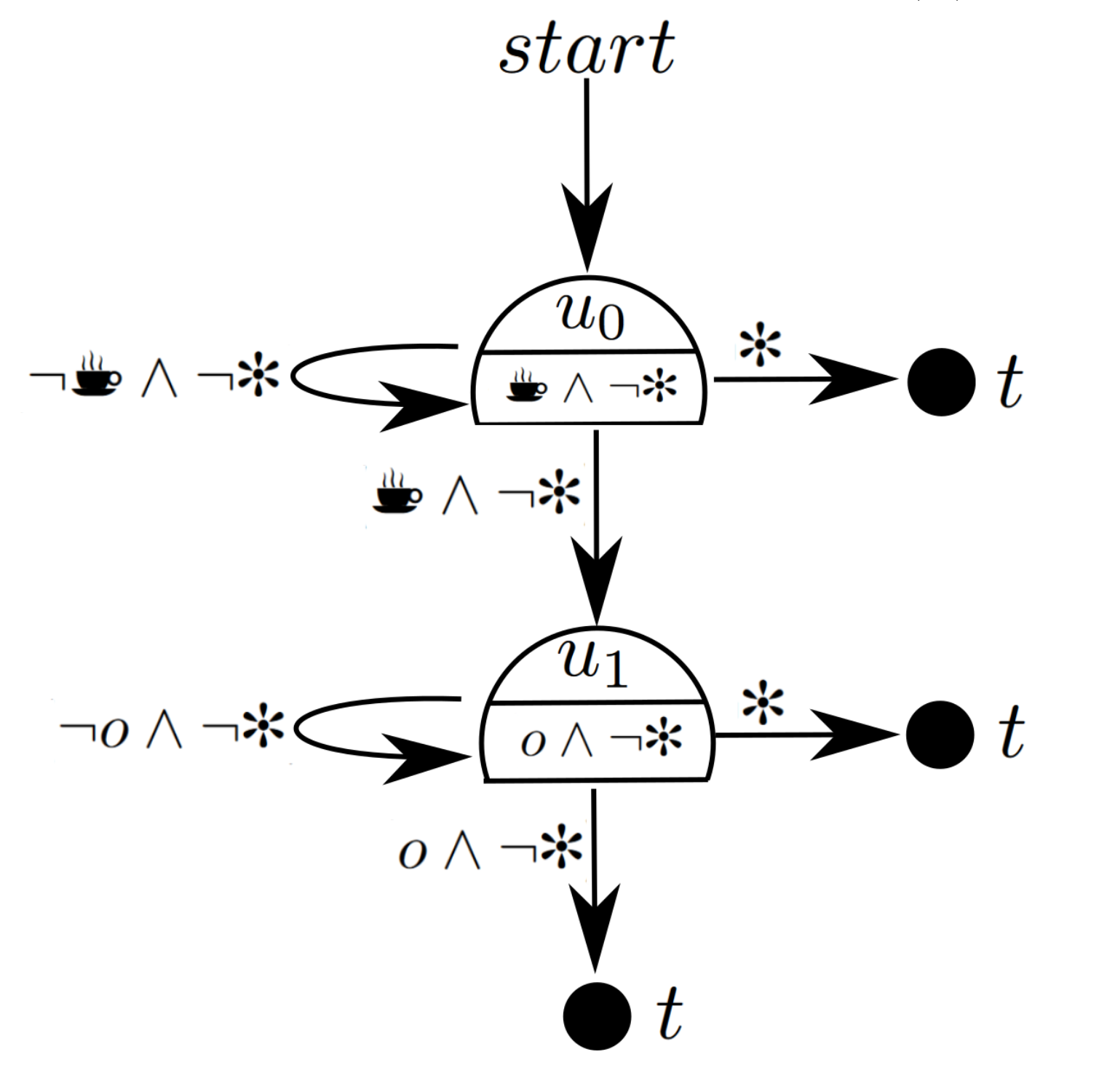

Skill Machines: Temporal Logic Composition in Reinforcement Learning

Poster @ DeepRL @ NeurIPS 2022; Lifelong learning @ IROS 2022

A framework where an agent first learns a set of base skills in a reward-free setting, and then combines these skills with the learned skill machine to produce composite behaviours specified by any regular language, such as linear temporal logics.

Joint work with Steven James and Benjamin Rosman.


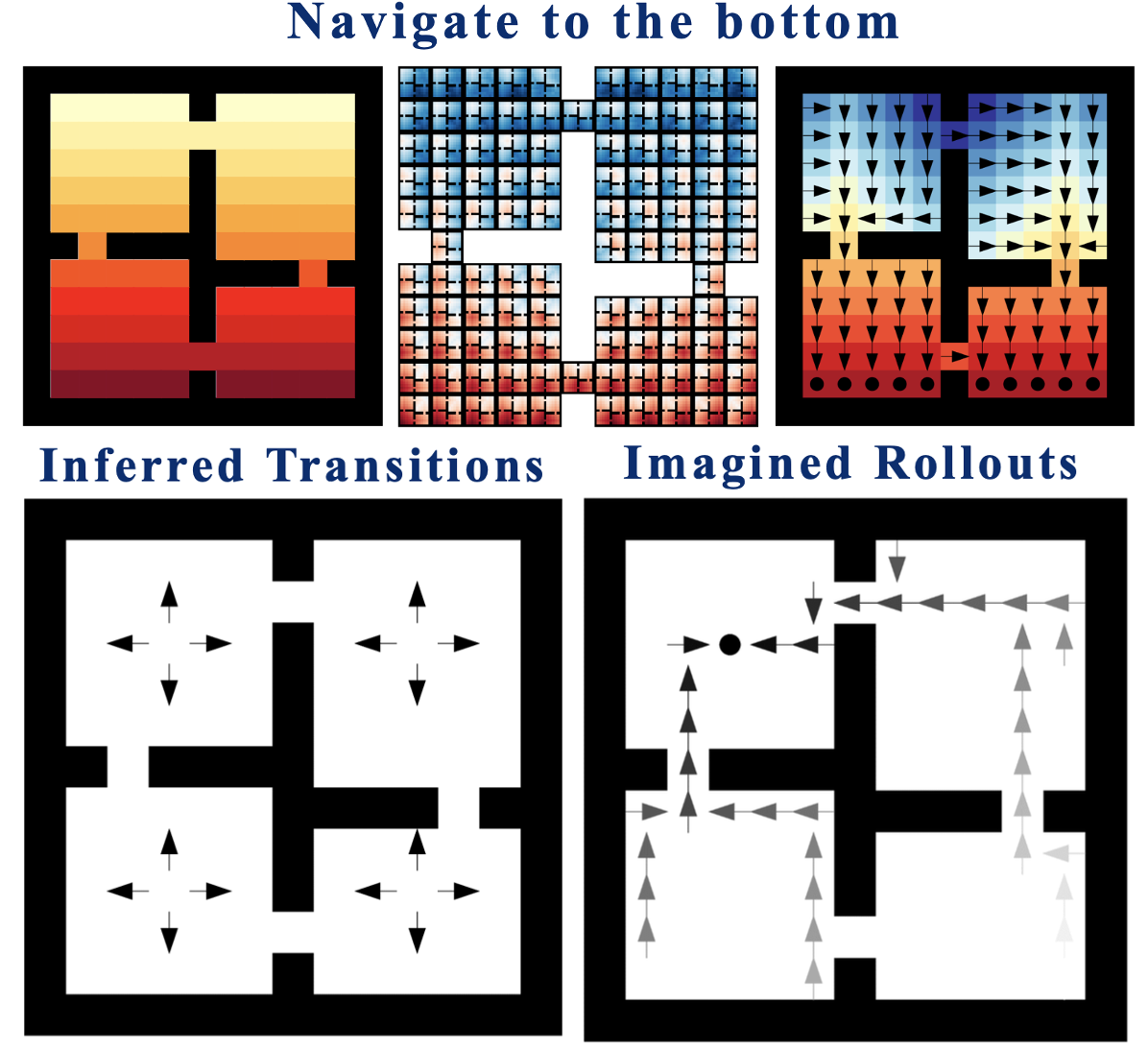

A general value function with provable mastery of the world that encodes the solution to the current task and has downstream zero-shot abilities.

Joint work with Steven James and Benjamin Rosman.


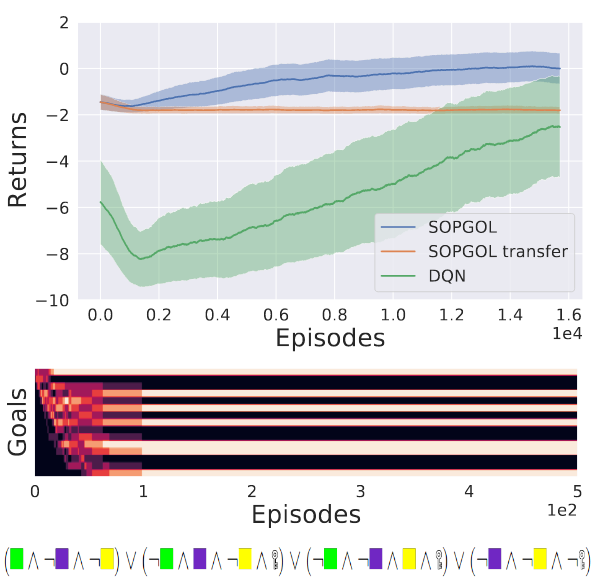


A framework with theoretical guarantees for an agent to quickly generalize over a task space by autonomously determining whether a new task can be solved zero-shot using existing skills, or whether a task-specific skill should be learned few-shot.

Joint work with Steven James and Benjamin Rosman.


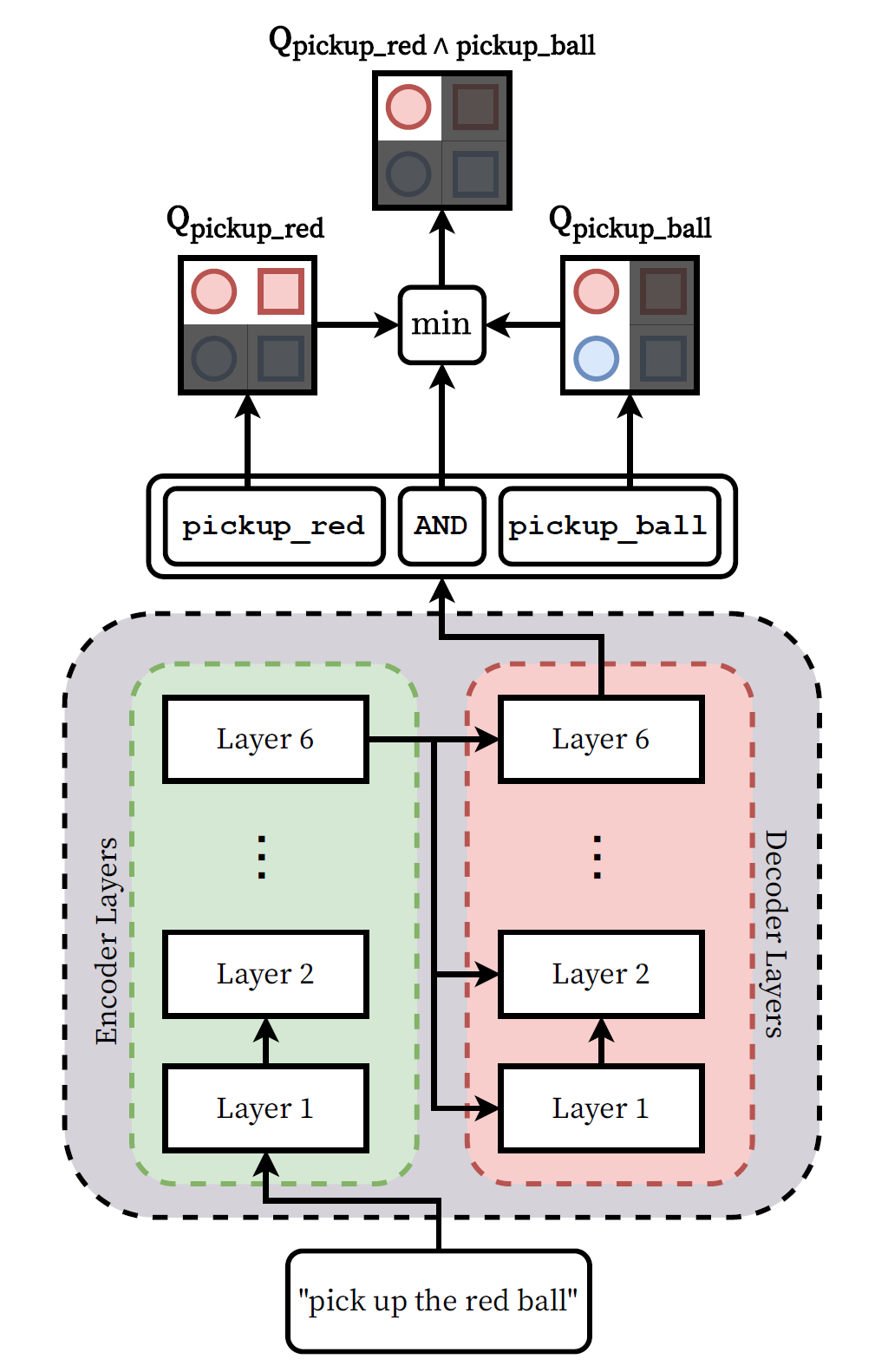

Learning to Follow Language Instructions with Compositional Policies

AAAI Fall Symposium on AI for HRI, 2021

We propose a framework that learns to execute natural language instructions in an environment consisting of goal-reaching tasks that share features of their task descriptions. Our approach leverages the compositionality of both value functions and language, with the aim of reducing the sample complexity of learning novel tasks.

Led by Vanya Cohen; joint work with Nakul Gopalan, Steven James, Matthew Gombolay, and Benjamin Rosman.


A Task Algebra For Agents In Reinforcement Learning

M.Sc Thesis, 2020

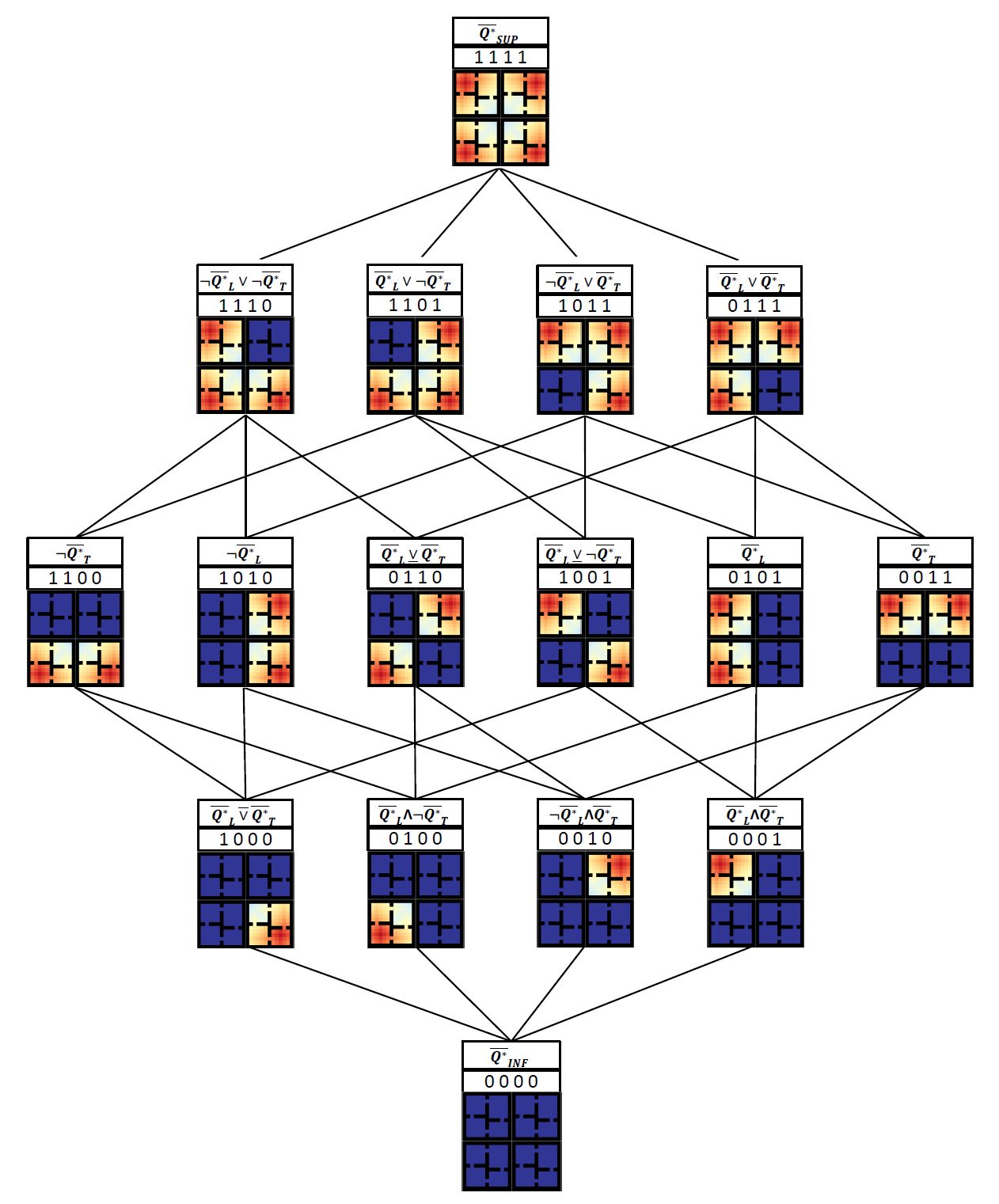

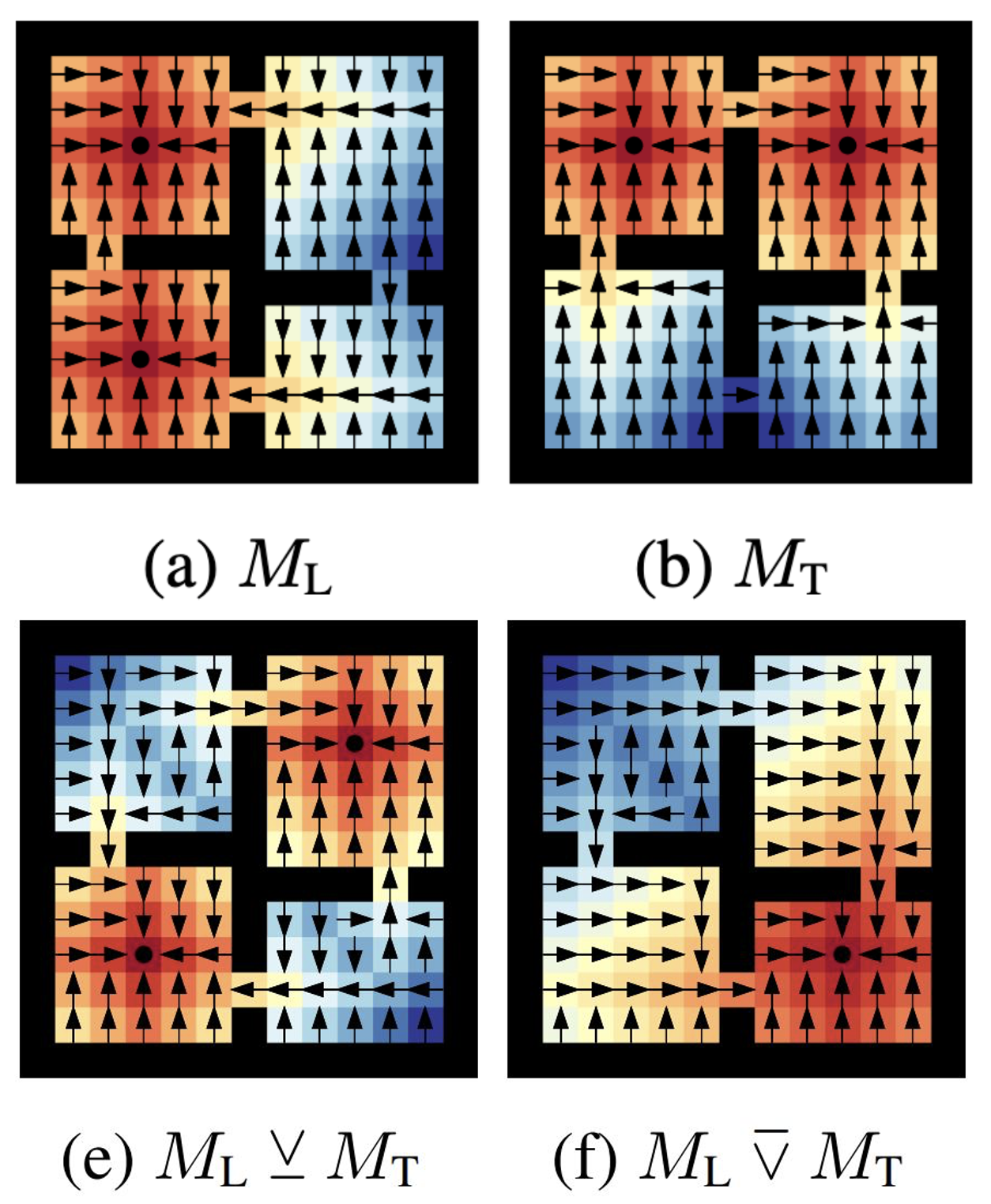

We propose a framework for defining lattice algebras, and Boolean algebras in particular, over the space of tasks. This allows us to formulate new tasks in terms of the negation, disjunction, and conjunction of a set of base tasks. We then show that by learning a new type of goal-oriented value function and restricting the rewards of the tasks, an agent can solve composite tasks with no further learning.

Advised by Steven James and Benjamin Rosman.


We propose a framework for defining a Boolean algebra over the space of tasks. This allows us to formulate new tasks in terms of the negation, disjunction, and conjunction of a set of base tasks. We then show that by learning goal-oriented value functions and restricting the transition dynamics of the tasks, an agent can solve these new tasks with no further learning. We prove that by composing these value functions in specific ways, we immediately recover the optimal policies for all tasks expressible under the Boolean algebra.

Joint work with Steven James and Benjamin Rosman.
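The composition idea in the abstract above can be illustrated with a toy sketch. It assumes that disjunction, conjunction, and negation of tasks correspond to an elementwise max, an elementwise min, and `(Q_max + Q_min) - Q` over goal-oriented value functions. The single-step world, the four goals, the penalty value, and every function name below are hypothetical and chosen only for illustration; this is not the implementation from the paper.

```python
import numpy as np

N_GOALS = 4       # hypothetical goals: 0=blue square, 1=blue circle, 2=purple square, 3=purple circle
PENALTY = -10.0   # assumed penalty for ending at a goal other than the one being evaluated

def extended_q(good_goals):
    """Goal-oriented values Q[goal, action] for a one-step world where action a reaches goal a."""
    q = np.full((N_GOALS, N_GOALS), PENALTY)
    for g in range(N_GOALS):
        q[g, g] = 1.0 if g in good_goals else 0.0
    return q

# Values of the tasks in which every goal (respectively no goal) is rewarded.
Q_MAX = extended_q({0, 1, 2, 3})
Q_MIN = extended_q(set())

def q_or(q1, q2):
    """Disjunction of two tasks: elementwise maximum."""
    return np.maximum(q1, q2)

def q_and(q1, q2):
    """Conjunction of two tasks: elementwise minimum."""
    return np.minimum(q1, q2)

def q_not(q):
    """Negation of a task, relative to the maximum and minimum tasks."""
    return (Q_MAX + Q_MIN) - q

def greedy_actions(q):
    """Actions that maximise the value after maximising over goals."""
    best_per_action = q.max(axis=0)
    return set(np.flatnonzero(best_per_action == best_per_action.max()).tolist())

q_blue = extended_q({0, 1})     # base task: reach a blue object
q_square = extended_q({0, 2})   # base task: reach a square

print(greedy_actions(q_and(q_blue, q_square)))   # blue AND square
print(greedy_actions(q_or(q_blue, q_square)))    # blue OR square
print(greedy_actions(q_not(q_blue)))             # NOT blue
```

In this toy setting the composed arrays coincide with the value functions one would build directly for the composite tasks, which is the "no further learning" property in miniature.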


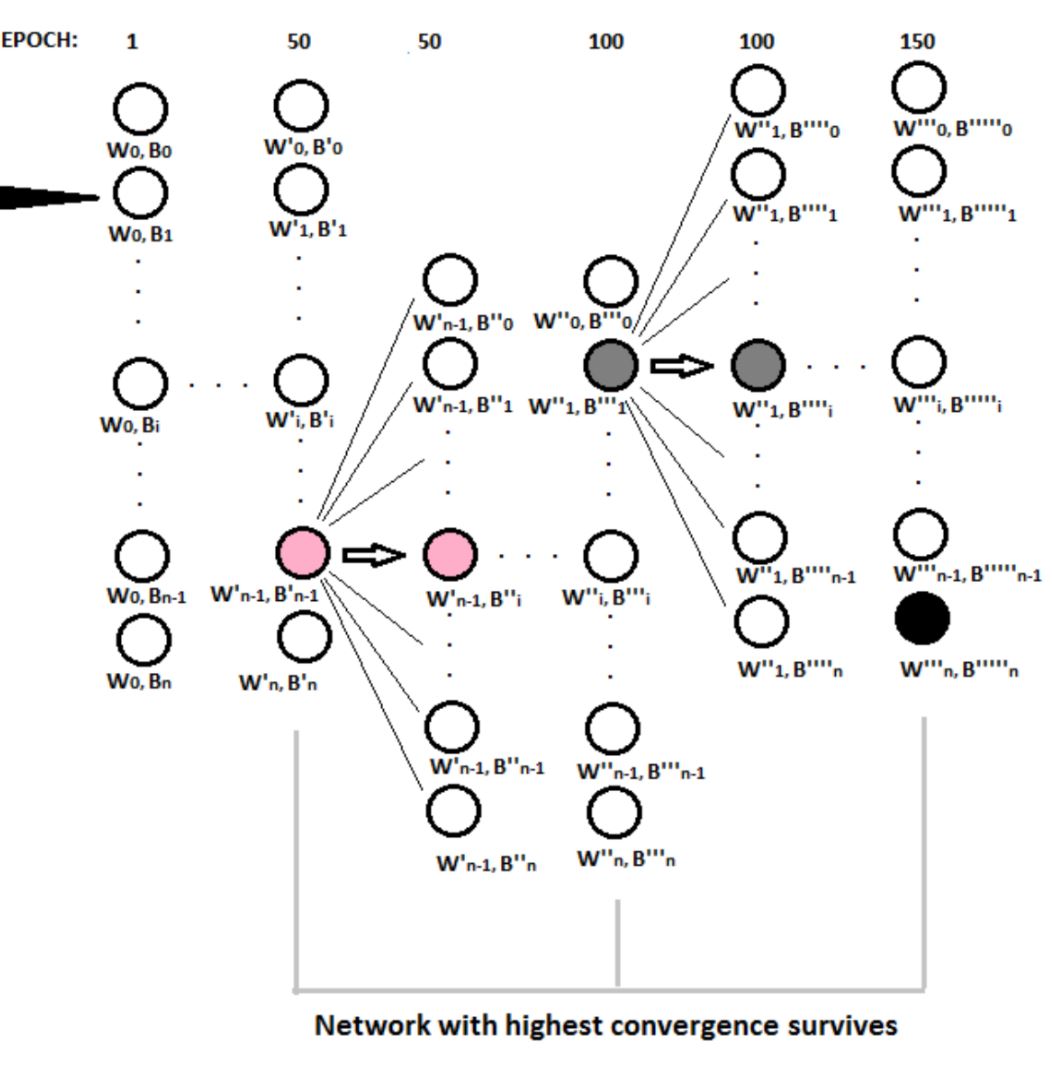

Hypersearch: A Parallel Training Approach For Improving Neural Networks Performance

Poster @ BAI @ NeurIPS 2019; B.Sc.H Thesis, 2018

This work proposes a novel embarrassingly parallel algorithm, Hypersearch, that takes advantage of the best of both worlds: hyperparameter optimization and parallel programming. Hypersearch works by training multiple neural networks of the same architecture but different hyperparameters in parallel while optimizing both networks and hyperparameters.

Advised by James Connan.
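As a rough illustration of training several models with different hyperparameters in parallel while adjusting both, here is a minimal sketch. It is not the Hypersearch algorithm itself, whose details are not given here. It swaps in a simple exploit-and-explore scheme: after each round, every worker copies the best worker's weight and a perturbed copy of its learning rate. The toy quadratic loss, the scalar "network", the thread pool standing in for parallel workers, and all names are assumptions.

```python
from concurrent.futures import ThreadPoolExecutor
import random

def train_steps(weight, lr, steps=20):
    """Gradient descent on the toy loss (w - 3)^2; returns the new weight and its loss."""
    for _ in range(steps):
        grad = 2.0 * (weight - 3.0)
        weight -= lr * grad
    return weight, (weight - 3.0) ** 2

def hypersearch_sketch(learning_rates, rounds=5, seed=0):
    """Train one worker per learning rate, sharing the best weight and learning rate each round."""
    rng = random.Random(seed)
    workers = [{"w": 0.0, "lr": lr, "loss": float("inf")} for lr in learning_rates]
    with ThreadPoolExecutor(max_workers=len(workers)) as pool:
        for _ in range(rounds):
            # Train all workers concurrently from their current weights.
            results = list(pool.map(lambda wk: train_steps(wk["w"], wk["lr"]), workers))
            for wk, (w, loss) in zip(workers, results):
                wk["w"], wk["loss"] = w, loss
            best = min(workers, key=lambda wk: wk["loss"])
            # Exploit: copy the best weight. Explore: perturb the best learning rate.
            for wk in workers:
                if wk is not best:
                    wk["w"] = best["w"]
                    wk["lr"] = best["lr"] * rng.choice([0.8, 1.2])
    return min(workers, key=lambda wk: wk["loss"])

best = hypersearch_sketch([0.001, 0.01, 0.1])
print(best["lr"], best["loss"])
```

With real networks, each worker would hold a full set of parameters and run on its own device or process; the thread pool here only shows the control flow.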


Personal

Reality: Shows me a new quality anime;

Me: Now this, does put a smile on my face.

When I am not procrastinating on current research work with other fun ideas, I am doing trash drawings.

Feel free to contact me via email, Twitter, or LinkedIn.